Recently I wanted to experiment with a project that required a language model, but I found that the ChatGPT API is billed separately, even if you already pay for a ChatGPT subscription. Then, while searching for “how to run a LLM locally”, I came across a very easy way to do it locally and free of charge. In this post, I will show you how to run an LLM locally on your machine.

How to Run LLM Locally

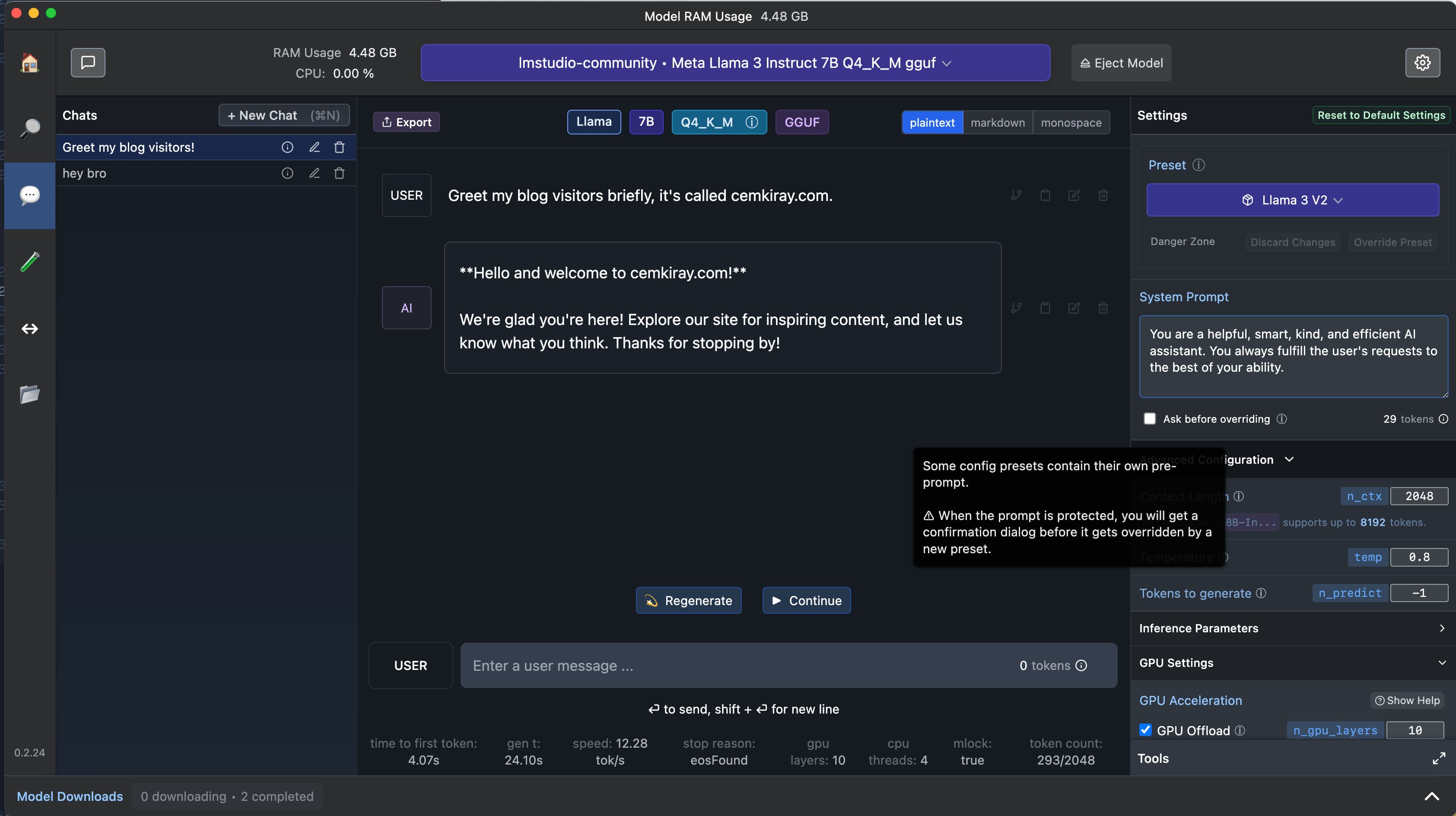

To run an LLM locally, we can use an application called LM Studio. It is a free tool that lets you download and run models on your own machine. It supports many models from Hugging Face and runs on Windows, Mac, and Linux.

- Download and install the latest version from: https://lmstudio.ai/

- Select and install an LLM from the list of available models. See the “Which LLM Model Is Best for You?” section for more information about which model to choose.

- On the top bar, select the model you installed to load it.

- After the model is loaded, go to the “💬 AI Chat” tab.

- Here, you will have a chat interface, similar to the ChatGPT app, where you can interact with the model. Type your message and press “Enter” to get a response. You can also experiment with the model by tweaking some parameters, such as the “System Prompt”.

Which LLM Model Is Best for You?

The best LLM for you depends on your use case and your hardware. A quick Google search can help you narrow it down. For example, I searched for “M1 Pro Max LLM models” to find models suited to my MacBook Pro (M1 Pro Max). I have tried these two models so far, and they both perform well on my machine:

- Llama 3 V2 (lmstudio-community/Meta-Llama-3-8B-Instruct-GGUF/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf)

- Mistral Instruct (TheBloke/Mistral-7B-Instruct-v0.2-GGUF/mistral-7b-instruct-v0.2.Q3_K_M.gguf)
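To get a rough feel for whether a model will fit on your hardware, you can estimate the size of its weights from the parameter count and the quantization level. The sketch below is a back-of-the-envelope calculation, not something LM Studio provides: the function names and the `headroom_gb` value are made up for illustration, and it assumes that a “Q4” file uses about 4 bits per weight and a “Q3” file about 3. Real GGUF files tend to be somewhat larger, so treat the result as a lower bound.

```python
def estimate_size_gb(params_billion, bits_per_weight):
    """Rough weight size in GB: parameters * bits per weight / 8 bits per byte."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(params_billion, bits_per_weight, ram_gb, headroom_gb=2.0):
    """Check the estimate against available memory.

    headroom_gb is an assumed allowance for the OS, the app, and the
    model's context; adjust it for your own machine.
    """
    needed = estimate_size_gb(params_billion, bits_per_weight) + headroom_gb
    return needed <= ram_gb


# Nominal bit widths are assumptions, see the note above.
llama3_8b_q4 = estimate_size_gb(8, 4)    # the 8B Llama 3 model at ~4 bits
mistral_7b_q3 = estimate_size_gb(7, 3)   # the 7B Mistral model at ~3 bits
```

For example, `fits_in_memory(8, 4, ram_gb=16)` checks whether an 8B model at roughly 4 bits would leave some room on a 16 GB machine.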

How to Run a Free LLM API Locally

LM Studio also provides a free LLM API that you can run locally on your machine. Here’s how you can do it:

- Go to the “Local Server” tab in LM Studio.

- On the top bar, select an LLM to load, if you haven’t already.

- After the model is loaded, click on “Start Server”.

- It will start a local server on your machine, and you will get an API endpoint that you can use to interact with the model. You can see examples of how to interact with the API in the “API Examples” section. You can copy the example code to get started.
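If you prefer to see the idea before opening the app, here is a minimal sketch of what a call to the local server might look like, using only the Python standard library. It assumes the server listens on `http://localhost:1234/v1` and accepts OpenAI-style chat completion requests; check the address and the example code shown in the “Local Server” tab, since your setup may differ. The function names and the `"local-model"` placeholder are my own, not part of LM Studio.

```python
import json
import urllib.request

# Assumption: default address of the local server; verify it in the
# "Local Server" tab before running this.
BASE_URL = "http://localhost:1234/v1"


def build_payload(user_message, system_prompt="You are a helpful assistant.",
                  model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,  # placeholder; use the identifier LM Studio shows
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


def extract_reply(response_body):
    """Pull the assistant's text out of an OpenAI-style response dict."""
    return response_body["choices"][0]["message"]["content"]


def chat(user_message, base_url=BASE_URL, timeout=120):
    """Send one message to the local server and return the reply text."""
    data = json.dumps(build_payload(user_message)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With the server started and a model loaded, calling `print(chat("Explain what a local LLM is in one sentence."))` should print the model's answer. The `system_prompt` argument plays the same role as the “System Prompt” setting in the chat tab.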

Conclusion

If you want to experiment with an LLM locally and for free, like I did, it is very easy to run one on your machine using LM Studio. I can now experiment with different models easily. I hope this guide helps you run an LLM locally on your machine as well. If you have any questions, feel free to hit me up through the links in the footer.

Check out “About My Blog” to find out more about me and this blog.